Show HN: The Roman Industrial Revolution that could have been (Vol 2)

A few months ago I shared the first issue of The Lydian Stone Series here:

https://news.ycombinator.com/item?id=44253083

It's an alternate-history comic about an archaeology student in modern Pompeii who discovers a slate that lets him exchange short messages with a Roman slave a week before the eruption of Vesuvius.

The premise is simple: what happens if someone in the Roman world suddenly gains access to modern scientific knowledge, but still has to build everything using the materials and tools available in 79 AD?

Volume 2 (The Engine of Empire) explores the second-order effects of that idea.

About the process: I write the story, research, structure, and dialogue. The narrative is planned first (acts → scenes → pages → panels). Once a panel is defined, I write a detailed visual description (camera angle, posture, lighting, environment, etc.).

LLMs help turn those descriptions into prompts, and image models generate sketches. I usually generate many variations and manually select or combine the ones that best match the panel.

The bulk of the work is in the narrative design, historical research, and building a plausible technological path the Romans could realistically follow. The AI mostly acts as a sketching assistant.

I'd love feedback on the story direction, pacing, and whether the industrial shift feels believable.

Show HN: Moongate – Ultima Online server emulator in .NET 10 with Lua scripting

I've been building a modern Ultima Online server emulator from scratch. It's not feature-complete (no combat, no skills yet), but the foundation is solid and I wanted to share it early.

What it does today:

- Full packet layer for the classic UO client (login, movement, items, mobiles)

- Lua scripting for item behaviors (double-click a potion, open a door: all defined in Lua, no C# recompile)

- Spatial world partitioned into sectors with delta sync (only sends packets for new sectors when crossing boundaries)

- Snapshot-based persistence with MessagePack

- Source generators for automatic DI wiring, packet handler registration, and Lua module exposure

- NativeAOT support: the server compiles to a single native binary

- Embedded HTTP admin API + React management UI

- Auto-generated doors from map statics (same algorithm as ModernUO/RunUO)
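To make the sector delta sync idea concrete, here is a minimal sketch in Python (not Moongate's actual C# code). The sector size, the view radius, and every function name are assumptions made up for illustration; the post only says that packets are sent for new sectors when a boundary is crossed.

```python
SECTOR_SIZE = 16  # hypothetical sector edge length, in tiles
VIEW_RADIUS = 1   # hypothetical: how many sectors are visible in each direction


def sector_of(x, y):
    """Map a world coordinate to the sector that contains it."""
    return (x // SECTOR_SIZE, y // SECTOR_SIZE)


def visible_sectors(center):
    """All sectors within VIEW_RADIUS of the given sector."""
    cx, cy = center
    return {
        (cx + dx, cy + dy)
        for dx in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
        for dy in range(-VIEW_RADIUS, VIEW_RADIUS + 1)
    }


def sectors_to_sync(old_pos, new_pos):
    """Return only the sectors that became visible after a move.

    Moving inside the same sector yields an empty set, so nothing is resent.
    """
    old_sector = sector_of(*old_pos)
    new_sector = sector_of(*new_pos)
    if old_sector == new_sector:
        return set()
    return visible_sectors(new_sector) - visible_sectors(old_sector)


# Crossing from sector (0, 0) into sector (1, 0) reveals one new column.
print(sorted(sectors_to_sync((15, 5), (16, 5))))
```

The set difference is what keeps the traffic proportional to what changed rather than to everything in view.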

Tech stack: .NET 10, NativeAOT, NLua, MessagePack, DryIoc, Kestrel

What's missing: Combat, skills, weather integration, NPC AI. This is still early — the focus so far has been on getting the architecture right so adding those systems doesn't require rewiring everything.

Why not just use ModernUO/RunUO? Those are mature and battle-tested. I started this because I wanted to rethink the architecture from scratch: strict network/domain separation, event-driven game loop, no inheritance-heavy item hierarchies, and Lua for rapid iteration on game logic without recompiling.

GitHub: https://github.com/moongate-community/moongatev2

Show HN: WebBridge turns any website into MCP tools by recording browser traffic

I am a 40+-year-old-slightly-techie-middle-aged-man who occasionally writes code to make life easier. I was a developer once - a very long time ago. I am an Engineer by degree and my instinct is to go for a solution. I work in tech - but on the "_ask_ developers what to build" side, not the "actually build it" side. With AI, I am now able to build more.

So I built WebBridge (yes - not so fancy name there). (Well - Claude built it. I directed. Like every PM does.)

What it actually does:

1. You install a Chrome extension

2. You browse to a site you're logged into - your library, your lab results portal, whatever

3. You click Record, do the thing you want to automate, click Stop

4. Claude reads the captured API traffic and generates a permanent MCP server

5. That server works with any MCP client - Claude (Cowork/Code), Cursor, VS Code, Windsurf, Cline, you name it

The whole thing takes about 10 minutes. No code written by you.

This is for non-tech folks who live inside AI providers, who just want to use it and move on. Legal analysts, market researchers, market watchers, marketing and competitive intelligence, and anyone who wants to use a specific website for a specific purpose, repeatedly.

The README has some use cases showcased: "Public Library Search" and "Legal Compliance Auditing."

There may not be an exact equivalent anywhere to what I purpose-built. I'd welcome being proven wrong on that.

Feedback is welcome - that's why I'm posting.

Show HN: Reconstruct any image using primitive shapes, runs in-browser via WASM

I built a browser-based port of fogleman/primitive — a Go CLI tool that approximates images using primitive shapes (triangles, ellipses, beziers, etc.) via a hill-climbing algorithm. The original tool requires building from source and running from the terminal, which isn't exactly accessible. I compiled the core logic to WebAssembly so anyone can drop an image and watch it get reconstructed shape by shape, entirely client-side with no server involved.
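For readers unfamiliar with the hill-climbing approach mentioned above, here is a toy sketch in Python. It is not fogleman/primitive's code: it works on a one-dimensional grayscale "image" with flat segments instead of triangles or ellipses, and all names and parameters (`steps`, `num_shapes`, the mutation ranges) are assumptions chosen for illustration.

```python
import random


def score(target, canvas):
    """Sum of squared differences; lower means a closer match."""
    return sum((t - c) ** 2 for t, c in zip(target, canvas))


def draw(canvas, shape):
    """Return a copy of the canvas with one flat segment painted on it."""
    start, end, value = shape
    out = list(canvas)
    for i in range(start, end):
        out[i] = value
    return out


def random_shape(rng, n):
    start = rng.randrange(n)
    end = rng.randrange(start + 1, n + 1)
    return (start, end, rng.randrange(256))


def mutate(rng, shape, n):
    """Nudge the segment's position, length, and brightness a little."""
    start, end, value = shape
    start = min(max(start + rng.randint(-2, 2), 0), n - 1)
    end = min(max(end + rng.randint(-2, 2), start + 1), n)
    value = min(max(value + rng.randint(-16, 16), 0), 255)
    return (start, end, value)


def add_best_shape(rng, target, canvas, steps=200):
    """Hill climbing: keep a mutation only if it lowers the error."""
    n = len(target)
    best = random_shape(rng, n)
    best_score = score(target, draw(canvas, best))
    for _ in range(steps):
        candidate = mutate(rng, best, n)
        candidate_score = score(target, draw(canvas, candidate))
        if candidate_score < best_score:
            best, best_score = candidate, candidate_score
    return best, best_score


def approximate(target, num_shapes=20, seed=0):
    """Build the picture up one shape at a time, as the demo does visually."""
    rng = random.Random(seed)
    canvas = [sum(target) // len(target)] * len(target)  # start from the mean
    history = [score(target, canvas)]
    for _ in range(num_shapes):
        shape, new_score = add_best_shape(rng, target, canvas)
        if new_score < history[-1]:  # only commit shapes that help
            canvas = draw(canvas, shape)
            history.append(new_score)
    return canvas, history


canvas, history = approximate([0] * 8 + [255] * 8)
print(history[0], "->", history[-1])
```

The same loop generalizes to two dimensions by swapping in a 2D rasterizer for `draw` and a richer shape description for `random_shape` and `mutate`.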

Demo: https://primitive-playground.taiseiue.jp/

Source: https://github.com/taiseiue/primitive-playground

Curious if anyone has ideas for shapes or features worth adding.

Show HN: Claude-replay – A video-like player for Claude Code sessions

I got tired of sharing AI demos with terminal screenshots or screen recordings.

Claude Code already stores full session transcripts locally as JSONL files. Those logs contain everything: prompts, tool calls, thinking blocks, and timestamps.

I built a small CLI tool that converts those logs into an interactive HTML replay.

You can step through the session, jump through the timeline, expand tool calls, and inspect the full conversation.

The output is a single self-contained HTML file — no dependencies. You can email it, host it anywhere, embed it in a blog post, and it works on mobile.

Repo: https://github.com/es617/claude-replay

Example replay: https://es617.github.io/assets/demos/peripheral-uart-demo.ht...

Show HN: I open-sourced my Steam game, 100% written in Lua, engine is also open

Homebrew engine https://github.com/willtobyte/carimbo

Show HN: Sqry – semantic code search using AST and call graphs

I built sqry, a local code search tool that works at the semantic level rather than the text level.

The motivation: ripgrep is great for finding strings, but it can't tell you "who calls this function", "what does this function call", or "find all public async functions that return Result". Those questions require understanding code structure, not just matching patterns.

sqry parses your code into an AST using tree-sitter, builds a unified call/ import/dependency graph, and lets you query it:

sqry query "callers:authenticate"

sqry query "kind:function AND visibility:public AND lang:rust"

sqry graph trace-path main handle_request

sqry cycles

sqry ask "find all error handling functions"
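To illustrate what a `callers:` query has to compute, here is a small sketch using Python's standard `ast` module as a stand-in. sqry itself uses tree-sitter and a far richer graph; the function `build_call_graph` and the sample source are hypothetical and only show the general idea of deriving caller edges from syntax rather than from text matches.

```python
import ast
from collections import defaultdict


def build_call_graph(source):
    """Map each called name to the set of functions that call it.

    Simplification: calls are matched by bare name only, and calls inside a
    nested function are also attributed to the enclosing function.
    """
    tree = ast.parse(source)
    callers = defaultdict(set)
    for func in ast.walk(tree):
        if not isinstance(func, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        for node in ast.walk(func):
            if not isinstance(node, ast.Call):
                continue
            callee = node.func
            if isinstance(callee, ast.Name):         # plain call: foo()
                callers[callee.id].add(func.name)
            elif isinstance(callee, ast.Attribute):  # method call: obj.foo()
                callers[callee.attr].add(func.name)
    return callers


SAMPLE = '''
def authenticate(user):
    return check_password(user)

def login(user):
    return authenticate(user)

def api_handler(request):
    return login(request.user)
'''

graph = build_call_graph(SAMPLE)
print(sorted(graph["authenticate"]))  # prints ['login']
```

A plain text search for "authenticate" would also hit the definition itself, comments, and strings; walking the syntax tree returns only real call sites.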

Some things that might be interesting to HN:

- 35 language plugins via tree-sitter (C, Rust, Go, Python, TypeScript, Java, SQL, Terraform, and more)

- Cross-language edge detection: FFI linking (Rust↔C/C++), HTTP route matching (JS/TS↔Python/Java/Go)

- 33-tool MCP server so AI assistants get exact call graph data instead of relying on embedding similarity

- Arena-based graph with CSR storage; indexed queries run ~4ms warm

- Cycle detection, dead code analysis, semantic diff between git refs

It's MIT-licensed and builds from source with Rust 1.90+. Fair warning: full build takes ~20 GB disk because 35 tree-sitter grammars compile from source.

Repo: https://github.com/verivusai-labs/sqry

Docs: https://sqry.dev

Happy to answer questions about the architecture, the NL translation approach, or the cross-language detection.

Show HN: A trainable, modular electronic nose for industrial use

Hi HN,

I’m part of the team building Sniphi.

Sniphi is a modular digital nose that uses gas sensors and machine-learning models to convert volatile organic compound (VOC) data into a machine-readable signal that can be integrated into existing QA, monitoring, or automation systems. The system is currently in an R&D phase, but already exists as working hardware and software and is being tested in real environments.

The project grew out of earlier collaborations with university researchers on gas sensors and odor classification. What we kept running into was a gap between promising lab results and systems that could actually be deployed, integrated, and maintained in real production environments.

One of our core goals was to avoid building a single-purpose device. The same hardware and software stack can be trained for different use cases by changing the training data and models, rather than the physical setup. In that sense, we think of it as a “universal” electronic nose: one platform, multiple smell-based tasks.

Some design principles we optimized for:

- Composable architecture: sensor ingestion, ML inference, and analytics are decoupled and exposed via APIs/events

- Deployment-first thinking: designed for rollout in factories and warehouses, not just controlled lab setups

- Cloud-backed operations: model management, monitoring, updates run on Azure, which makes it easier to integrate with existing industrial IT setups

- Trainable across use cases: the same platform can be retrained for different classification or monitoring tasks without redesigning the hardware

One public demo we show is classifying different coffee aromas, but that’s just a convenient example. In practice, we’re exploring use cases such as:

- Quality control and process monitoring

- Early detection of contamination or spoilage

- Continuous monitoring in large storage environments (e.g. detecting parasite-related grain contamination in warehouses)

Because this is a hardware system, there’s no simple way to try it over the internet. To make it concrete, we’ve shared:

- A short end-to-end demo video showing the system in action (YouTube)

- A technical overview of the architecture and deployment model: https://sniphi.com/

At this stage, we’re especially interested in feedback and conversations with people who:

- Have deployed physical sensors at scale

- Have run into problems that smell data might help with

- Are curious about piloting or testing something like this in practice

We’re not fundraising here. We’re mainly trying to learn where this kind of sensing is genuinely useful and where it isn’t.

Happy to answer technical questions.

Show HN: mTile – native macOS window tiler inspired by gTile

Built this with codex/claude because I missed gTile[1] from Ubuntu and couldn’t find a macOS tiler that felt good on a big ultrawide screen. Most mac options I tried were way too rigid for my workflow (fixed layouts, etc) or wanted a monthly subscription. gTile’s "pick your own grid sizes + keyboard flow" is exactly what I wanted and used for years.

Still rough in places and not full parity, but very usable now and I run it daily at work (forced mac life).

[1]: https://github.com/gTile/gTile

Show HN: Swarm – Program a colony of 200 ants using a custom assembly language

We built an ant colony simulation as an internal hiring challenge at Moment and decided to open it up publicly.

You write a program in a custom assembly-like instruction set (we call it ant-ssembly) that controls 200 ants. Each ant can sense nearby cells (food, pheromones, home, other ants) but has no global view. The only coordination mechanism is pheromone trails, which ants can emit and sense, but that's it. Your program runs identically on every ant.

The goal is to collect the highest percentage of food across a set of maps. Different map layouts (clustered food, scattered, obstacles) reward very different strategies. The leaderboard is live.

Grand prize is a trip to Maui for two paid for by Moment. Challenge closes March 12.

Curious what strategies people discover. We've seen some surprisingly clever emergent behavior internally.

Show HN: Interactive 3D globe of EU shipping emissions

The article provides an overview of the Seafloor project, which aims to map the entire seafloor using various technologies and data sources. The project aims to create a comprehensive and publicly available dataset to improve our understanding of the world's oceans and their ecosystems.

Show HN: PageAgent, A GUI agent that lives inside your web app

Hi HN,

I'm building PageAgent, an open-source (MIT) library that embeds an AI agent directly into your frontend.

I built this because I believe there's a massive design space for deploying general agents natively inside the web apps we already use, rather than treating the web merely as a dumb target for isolated bots.

Currently, most AI agents operate from external clients or server-side programs, effectively leaving web development out of the AI ecosystem. I'm experimenting with an "inside-out" paradigm instead. By dropping the library into a page, you get a client-side agent that interacts natively with the live DOM tree and inherits the user's active session out of the box, which works perfectly for SPAs.

To handle cross-page tasks, I built an optional browser extension that acts as a "bridge". This allows the web-page agent to control the entire browser with explicit user authorization. Instead of a desktop app controlling your browser, your web app is empowered to act as a general agent that can navigate the broader web.

I'd love to start a conversation about the viability of this architecture, and what you all think about the future of in-app general agents. Happy to answer any questions!

Show HN: Graph-Oriented Generation – Beating RAG for Codebases by 89%

LLMs are better at being the "mouth" than the "brain" and I can prove it mathematically. I built a deterministic graph engine that offloads reasoning from the LLM. It reduces token usage by 89% and makes a tiny 0.8B model trace enterprise execution paths flawlessly. Here is the white paper and the reproducible benchmark.

Show HN: Modembin – A pastebin that encodes your text into real FSK modem audio

A fun weekend project: https://www.modembin.com

It's a pastebin, except text/files are encoded into .wav files using real FSK modem audio. Image sharing is supported via Slow-Scan Television (SSTV), a method of transmitting images as FM audio originally used by ham radio operators.

Everything runs in the browser with zero audio libraries and the encoding is vanilla TypeScript sine wave math: phase-continuous FSK with proper 8-N-1 framing, fractional bit accumulation for non-integer sample rates, and a quadrature FM discriminator on the decode side (no FFT windowing or Goertzel). The only dependency is lz-string for URL sharing compression.
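As a rough illustration of the encode side described above, here is a Python sketch of phase-continuous FSK with 8-N-1 framing. It is not Modembin's TypeScript: the function names and the 8000 Hz default sample rate are assumptions for illustration, while the tone pair is the standard Bell 103 originate-side pair (1270 Hz mark, 1070 Hz space) at 300 baud.

```python
import math

MARK_HZ = 1270.0   # Bell 103 originate side, binary 1
SPACE_HZ = 1070.0  # Bell 103 originate side, binary 0
BAUD = 300


def frame_8n1(byte):
    """One start bit (0), eight data bits LSB first, no parity, one stop bit (1)."""
    return [0] + [(byte >> i) & 1 for i in range(8)] + [1]


def fsk_samples(data, sample_rate=8000):
    """Encode bytes as FSK samples in the range [-1, 1].

    The phase accumulator carries over between bits, so the waveform has no
    jumps when the tone changes. Bit boundaries are tracked as fractions:
    8000 / 300 is about 26.67 samples per bit, so bits alternate between 26
    and 27 samples instead of drifting.
    """
    samples = []
    phase = 0.0
    bits_sent = 0
    for byte in data:
        for bit in frame_8n1(byte):
            bits_sent += 1
            boundary = bits_sent * sample_rate / BAUD  # ideal end of this bit
            freq = MARK_HZ if bit else SPACE_HZ
            step = 2.0 * math.pi * freq / sample_rate
            while len(samples) < boundary:
                samples.append(math.sin(phase))
                phase = (phase + step) % (2.0 * math.pi)
    return samples


audio = fsk_samples(b"A")
print(frame_8n1(ord("A")), len(audio))
```

Writing `audio` to a .wav file only needs scaling to 16-bit integers and the standard library's `wave` module.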

It supports Bell 103 (300 baud), Bell 202 (1200 baud), V.21, RTTY/Baudot, Caller ID (Bellcore MDMF), DTMF, Blue Box MF tones, and SSTV image encoding. There's also a chat mode where messages are transmitted as actual Bell 103 audio over WebSocket... or use the acoustic mode for speaker-to-mic coupling for in-room local chat.

Show HN: Mantle – Remap your Mac keyboard without editing Kanata config files

I built Mantle because I wanted homerow mods and layers on my laptop without hand writing Lisp syntax.

The best keyboard remapping engine on macOS (Kanata) requires editing .kbd files, which is a pain. Karabiner-Elements is easy for simple single key remapping (e.g. caps -> esc), but anything more wasn’t working out for me.

What you can do with Mantle:

- Layers: hold a key to switch to a different layout (navigation, numpad, media)

- Homerow mods: map Shift, Control, Option, Command to your home row keys when held

- Tap-hold: one key does two things: tap for a letter, hold for a modifier

- Import/export: bring existing Kanata .kbd configs or start fresh visually

Runs entirely on your Mac. No internet, no accounts. Free and MIT licensed.

Would love feedback, especially from people who tried Kanata or Karabiner and gave up.

Show HN: VaultNote – Local-first encrypted note-taking in the browser

Hi HN,

I built VaultNote, a local-first note-taking app that runs entirely in the browser.

Key ideas:

- 100% local-first: no backend or server

- No login, accounts, or tracking

- Notes stored locally in IndexedDB / LocalStorage

- AES encryption with a single master password

- Tree-structured notes for organizing knowledge

The goal was to create a simple note app where your data never leaves your device. You can open the site, enter a master password, and start writing immediately.

Since everything is stored locally, VaultNote also supports import/export so you can back up your data.

Curious to hear feedback from the HN community, especially on:

- the security approach (local AES encryption)

- IndexedDB storage design

- local-first UX tradeoffs

Demo: https://vaultnote.saposs.com

Thanks!

Show HN: Mog, a programming language for AI agents

I wrote a programming language for extending AI agents, called Mog. It's like a statically typed Lua.

Most AI agents have trouble enforcing their normal permissions in plugins and hooks, since they're external scripts.

Mog's capability system gives the agent full control over I/O, so it can enforce whatever permissions it wants in the Mog code. This is even true if the plugin wants to run bash -- the agent can check each bash command the Mog code emits using the exact same predicate it uses for the LLM's direct bash tool.

Mog is a statically typed, compiled, memory-safe language with native async support and minimal syntax. Its compiler and its runtime are both written in Rust, with `extern "C"` so the runtime can easily be embedded in agents written in different languages.

It's designed to be written by LLMs. Its syntax is familiar, it minimizes foot-guns, and its full spec fits in a 3200-token file.

The language is quite new, so no hard security guarantees are claimed at present. Contributions welcome!

Show HN: Best ways to organize research links

The best ways to organize research links are usually the simplest ones. Capture fast. Group by project. Save context. Review regularly. Keep one clear home base. That is the system.

Show HN: Feedster, an RSS/feed reader focused on discovery and agent integration

Hey HN,

I built Feedster for a few reasons:

1. I want to spend more time reading people's blogs, long form content, even more traditional digital publications and less time on social media.

2. RSS readers have typically felt a lot like Apple Mail circa 2010... Unread counts for every feed, everything in its own discrete view, no means for easily discovering new stuff. Not necessarily a bad thing, but it does make reading content feel like _work_, which directly opposes goal number 1.

3. No one has really rethought the shape of RSS in quite some time (Google Reader is probably the biggest re-think here that I can remember). It's typically been a client that downloads posts from various feeds on the internet. This is simple and it works, but what if there was a different shape that was more communal, more editorial, and was web-based?

4. I haven't seen any RSS-powered AI agent workflows and I wanted to experiment with what that might look like. To start, I built a hosted MCP server that users can authenticate to and give their agents read-only access to their Feedster channels and feeds. I plan to add more functionality here, as it would be very cool to have an agent do a search on Feedster and find other feeds you might be interested in and add them to your channels automatically. Or dump a giant OPML file of feeds into an agent and have it auto add them to your account and categorize them into different channels for you.

You can read more about the background on my blog here: https://p.atrick.org/2026/03/05/introducing-feedster

Thanks for checking it out, any feedback or discussion is appreciated.

Show HN: Argus – VSCode debugger for Claude Code sessions

Show HN: Jido 2.0, Elixir Agent Framework

Hi HN!

I'm the author of an Elixir Agent Framework called Jido. We reached our 2.0 release this week, shipping a production-hardened framework to build, manage and run Agents on the BEAM.

Jido now supports a host of Agentic features, including:

- Tool Calling and Agent Skills

- Comprehensive multi-agent support across distributed BEAM processes with Supervision

- Multiple reasoning strategies including ReAct, Chain of Thought, Tree of Thought, and more

- Advanced workflow capabilities

- Durability through a robust Storage and Persistence layer

- Agentic Memory

- MCP and Sensors to interface with external services

- Deep observability and debugging capabilities, including full stack OTel

I know Agent Frameworks can be considered a bit stale, but there hasn't been a major release of a framework on the BEAM. With a growing realization that the architecture of the BEAM is a good match for Agentic workloads, the time was right to make the announcement.

My background is enterprise engineering, distributed systems and Open Source. We've got a strong and growing community of builders committed to the Jido ecosystem. We're looking forward to what gets built on top of Jido!

Come build agents with us!

Show HN: Solace – Mac menu bar app that adapts to the world around you. Finally

macOS gives you three appearance options: Light, Dark, or Auto. Auto follows sunset, which is a surprisingly blunt heuristic. It doesn't account for season (dark mode at 3:50pm in December, light mode at 9pm in June), and it has zero awareness of actual conditions outside. Overcast and dark at 2pm? Your Mac doesn't care.

The deeper problem is that macOS treats appearance, wallpaper, and colour temperature as unrelated settings. You end up stitching together separate tools that don't know about each other, or you just live with the defaults and accept the friction. Solace tries to solve this by treating them as one system. It sits in the menu bar and coordinates sunset and sunrise scheduling, weather-aware dark mode switching, wallpaper sync across displays, and gradual screen warmth -- all driven by the same set of inputs (solar position, local weather, time of day).

The weather-aware piece is the part I haven't found elsewhere. It pulls cloud cover data and triggers dark mode when conditions warrant it, independent of the solar schedule. Right now the threshold is fixed at 75% cloud cover -- making that configurable is the next update.
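The decision described above can be sketched in a few lines of Python. This is not Solace's Swift code: the function name, its parameters, and the choice of "at or above 75%" as the comparison are assumptions; the post only states that the threshold is fixed at 75% cloud cover and that the weather trigger is independent of the solar schedule.

```python
from datetime import time

CLOUD_COVER_THRESHOLD = 75  # percent; the fixed threshold mentioned in the post


def should_use_dark_mode(now, sunrise, sunset, cloud_cover_percent):
    """Dark mode if it is night OR heavily overcast (hypothetical helper)."""
    is_night = now < sunrise or now >= sunset
    is_overcast = cloud_cover_percent >= CLOUD_COVER_THRESHOLD
    return is_night or is_overcast


# Overcast at 2pm: the weather rule fires even though the sun is up.
print(should_use_dark_mode(time(14, 0), time(7, 0), time(17, 0), 90))
```

Making the threshold configurable would amount to passing it in as a parameter instead of reading the module-level constant.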

Built natively with Swift and SwiftUI. All location data stays on-device. No network calls except weather lookups. No analytics. $4.99, one-time. Interested in feedback on the approach, especially from anyone who's tried to solve this problem differently.

Show HN: Tensor Spy: inspect NumPy and PyTorch tensors in the browser, no upload

We needed a side project to give agentic coding a try, and created tensorspy.com together with Junie and ChatGPT 5.2.

Tensor Spy lets you quickly inspect the contents of numpy & pytorch tensors locally (your tensors are not uploaded to any servers).

This is useful to validate your deep learning data pipelines, to check which layers in your diverging model are actually going haywire, and just because it's kind of cool & a lot more convenient for one-off inspections than loading things up in python.

If you work with diffusion models, inspecting the latent space can be quite informative: you want some "noise" in there but it should probably be fairly smooth for your LDM (Latent Diffusion Model) to be able to target it well.

Also, if you haven't looked at your data, it's probably not what you think it is ;)

Basic stats are auto-computed, and any inf/nan values are both counted and rendered with contrasting colors, to help you quickly identify issue hotspots.
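For a sense of what such a summary involves, here is a minimal sketch in plain Python over a flat list of floats. It is not Tensor Spy's implementation; the function name `summarize` and the choice of statistics are assumptions, and a real tool would work on NumPy or PyTorch arrays instead of lists.

```python
import math


def summarize(values):
    """Count NaN/inf entries and compute basic stats over the finite ones."""
    finite = [v for v in values if math.isfinite(v)]
    stats = {
        "count": len(values),
        "nan": sum(1 for v in values if math.isnan(v)),
        "inf": sum(1 for v in values if math.isinf(v)),
    }
    if finite:
        mean = sum(finite) / len(finite)
        variance = sum((v - mean) ** 2 for v in finite) / len(finite)
        stats.update(
            min=min(finite),
            max=max(finite),
            mean=mean,
            std=math.sqrt(variance),  # population standard deviation
        )
    return stats


print(summarize([1.0, 2.0, 3.0, float("nan"), float("inf")]))
```

Excluding non-finite values before computing the mean matters: a single NaN would otherwise turn every statistic into NaN and hide the rest of the distribution.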

The site is free, and our broad intention is to keep it that way (we run a bunch of pro-bono little utility sites in addition to our commercial ones, they're all linked on the about page).

Would love to hear your thoughts, I'm sure there are some stats or utility features we missed, so please give it a spin and let us know!

---

Agentic coding is a brave new world. Three years ago, after the initial rush of ChatGPT's launch, I commented to some friends that "we're standing on the beach and the water just receded". The tsunami is really hitting now. As in: this project took about 2 weeks, and not only would we not have done it without agentic coding, it would have taken months using "traditional methods". With agentic coding, adding .pt/.pth support was basically a single request. And it just worked. Time to adapt yet again.

Show HN: Pg_sorted_heap–Physically sorted PostgreSQL with builtin vector search

The article discusses a PostgreSQL extension called 'pg_sorted_heap' that provides an alternative to the standard B-tree index. It offers improved performance for certain types of queries by storing data in a heap structure sorted by the indexed column.

Show HN: LoRA gradients on Apple's Neural Engine at 2.8W

This article describes the training of a large language model called ANE-LoRA, which is a variation of the GPT-3 model. The authors provide details on the dataset, training process, and evaluations conducted to assess the model's performance.

Show HN: Claude skill to do your taxes

TL;DR Claude Code did my 2024 and 2025 taxes. Added a skill that anyone can use to do their own.

I tested it against TurboTax on my own 2024 and 2025 returns. Same result without clicking through 45 minutes of wizard steps.

Would love PRs or feedback as we come up on tax season.

Learnings from replacing TurboTax with Claude

Skill looping: The first iteration of my taxes took almost an hour and a decent amount of prompting. Many context compactions, tons of PDF issues, lots of exploration. When it was done, I asked Claude to write the skill to make it faster the next time (e.g. always check for XFA tags first when discovering form fields).

Skills aren’t just .MD files anymore: Now they’re folders that can contain code snippets, example files, and rules. In 2025 we were all building custom agents with custom tools. In 2026 every agent has its own code execution, network connection, and workspace. So it’s custom skills on the same agent (trending towards Claude Code or Cowork).

Show HN: Anchor Engine – Deterministic Semantic Memory for LLMs Local (<3GB RAM)

Anchor Engine is ground truth for personal and business AI. A lightweight, local-first memory layer that lets LLMs retrieve answers from your actual data—not hallucinations. Every response is traceable, every policy enforced. Runs in <3GB RAM. No cloud, no drift, no guessing. Your AI's anchor to reality.

We built Anchor Engine because LLMs have no persistent memory. Every conversation is a fresh start—yesterday's discussion, last week's project notes, even context from another tab—all gone. Context windows help, but they're ephemeral and expensive. The STAR algorithm (Semantic Traversal And Retrieval) takes a different approach. Instead of embedding everything into vector space, STAR uses deterministic graph traversal. But before traversal comes atomization—our lightweight process for extracting just enough conceptual structure from text to build a traversable semantic graph.

*Atomization, not exhaustive extraction.* Projects like Kanon 2 are doing incredible work extracting every entity, citation, and clause from documents with remarkable precision. That's valuable for document intelligence. Anchor Engine takes a different path: we extract only the core concepts and relationships needed to support semantic memory. For example, "Apple announced M3 chips with 15% faster GPU performance" atomizes to nodes for [Apple, M3, GPU] and edges for [announced, has-performance]. Just enough structure for retrieval, lightweight enough to run anywhere.

The result is a graph that's just rich enough for an LLM to retrieve relevant context, but lightweight enough to run offline in <3GB RAM—even on a Raspberry Pi or in a browser via WASM.

*Why graph traversal instead of vector search?*

- Embeddings drift over time and across models

- Similarity scores are opaque and nondeterministic

- Vector search often requires GPUs or cloud APIs

- You can't inspect why something was retrieved

STAR gives you deterministic, inspectable results. Same graph, same query, same output—every time. And because the graph is built through atomization, it stays small and portable.
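To show what "same graph, same query, same output" can look like in practice, here is a toy Python sketch. It is not the STAR algorithm (the whitepaper covers that); it is a plain breadth-first traversal with sorted neighbors. The way the two edge labels from the M3 example are attached to node pairs, the function names, and the `max_hops` parameter are all assumptions made for illustration.

```python
from collections import deque

# Assumed wiring of the atomized example: the post lists the nodes and edge
# labels but does not say which nodes each edge connects.
EDGES = [
    ("Apple", "announced", "M3"),
    ("M3", "has-performance", "GPU"),
]


def build_graph(edges):
    """Undirected adjacency lists, sorted so iteration order never varies."""
    graph = {}
    for source, label, dest in edges:
        graph.setdefault(source, []).append((label, dest))
        graph.setdefault(dest, []).append((label, source))
    for neighbors in graph.values():
        neighbors.sort()
    return graph


def retrieve(graph, start, max_hops=2):
    """Breadth-first walk returning (node, hop distance) in a fixed order."""
    distance = {start: 0}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        order.append((node, distance[node]))
        if distance[node] == max_hops:
            continue
        for _label, neighbor in graph.get(node, []):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    return order


graph = build_graph(EDGES)
print(retrieve(graph, "Apple"))  # prints [('Apple', 0), ('M3', 1), ('GPU', 2)]
```

Because the result is a path through named edges, each retrieved node can be explained by the hops that reached it, which is the inspectability the list above contrasts with similarity scores.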

*Key technical details:*

- Runs entirely offline in <3GB RAM. No API calls, no GPUs.

- Compiled to WASM – embed it anywhere, including browsers.

- Recursive architecture – we used Anchor Engine to help write its own code. The dogfooding is real: what would have taken months of context-switching became continuous progress. I could hold complexity in my head because the engine held it for me.

- AGPL-3.0 – open source, always.

*What it's not:* It's not a replacement for LLMs or vector databases. It's a memory layer—a deterministic, inspectable substrate that gives LLMs persistent context without cloud dependencies. And it's not a competitor to deep extraction models like Kanon 2; they could even complement each other (Kanon 2 builds the graph, Anchor Engine traverses it for memory).

*The whitepaper* goes deep on the graph traversal math and includes benchmarks vs. vector search: https://github.com/RSBalchII/anchor-engine-node/blob/d9809ee...

If you've ever wanted LLM memory that fits on a Raspberry Pi and doesn't hallucinate what it remembers—check it out, and I'd love your feedback on where graph traversal beats (or loses to) vector search.

We're especially interested in feedback from people who've built RAG systems, experimented with symbolic memory, or worked on graph-based AI.

Reddit discussion: https://www.reddit.com/r/LocalLLaMA/s/EoN7N3OyXK

Show HN: Poppy – A simple app to stay intentional with relationships

I built Poppy as a side project to help people keep in touch more intentionally. Would love feedback on onboarding, reminders, and overall UX. Happy to answer questions.

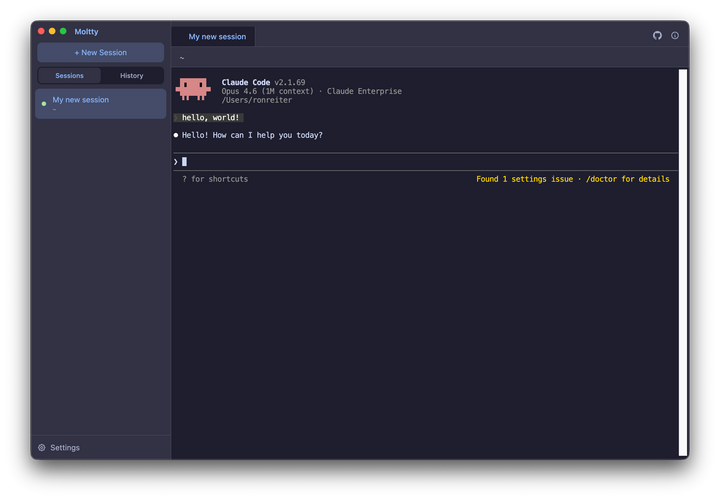

Show HN: Moltty – Organized, Persistent AI Coding Sessions

Moltty is a web-based platform that allows users to create, manage, and share interactive presentations and documents. The platform offers features such as real-time collaboration, multimedia integration, and various presentation templates to enhance the creation and delivery of digital content.

Show HN: ContextFlow – YouTube videos to viral social content (built by a 15yo)

I'm 15 and built ContextFlow AI — paste any YouTube URL and it generates viral Reddit posts, X threads, and LinkedIn content in seconds. Tech stack: React 19, FastAPI, Supabase, Groq (Llama 3.3 70B), Stripe. Free tier available — 5 generations/day. Would love feedback from HN.